written by Alex Stocks on 2015/07/19,版权所有,无授权不得转载

redis启动流程:

int main(int argc, char **argv) {

// 初始化conf

initServerConfig();

// 加载配置

if (argc >= 2) {

loadServerConfig(configfile,options);

sdsfree(options);

}

// 启动server

initServer();

// 启动linux的内存优化项[overcommit_memory & transparent_huge_page],从磁盘加载数据

if (!server.sentinel_mode) {

/* Things not needed when running in Sentinel mode. */

redisLog(REDIS_WARNING,"Server started, Redis version " REDIS_VERSION);

#ifdef __linux__

linuxMemoryWarnings();

#endif

checkTcpBacklogSettings();

loadDataFromDisk();

}

// 开足马力,运转发动机

aeSetBeforeSleepProc(server.el,beforeSleep);

aeMain(server.el);

aeDeleteEventLoop(server.el);

return 0;

}

初始化server.runid,并给replication相关参数赋值。

Linux中的随机数可以从两个特殊的文件中产生,一个是/dev/urandom.另外一个是/dev/random。他们产生随机数的原理是利用当前系统的熵池来计算出固定一定数量的随机比特,然后将这些比特作为字节流返回。

熵池就是当前系统的环境噪音,熵指的是一个系统的混乱程度,系统噪音可以通过很多参数来评估,如内存的使用,文件的使用量,不同类型的进程数量等等。如果当前环境噪音变化的不是很剧烈或者当前环境噪音很小,比如刚开机的时候,而当前需要大量的随机比特,这时产生的随机数的随机效果就不是很好了。这就是为什么会有/dev/urandom和/dev/random这两种不同的文件,后者在不能产生新的随机数时会阻塞程序,而前者不会(ublock),当然产生的随机数效果就不太好了,这对加密解密这样的应用来说就不是一种很好的选择。

/dev/random会阻塞当前的程序,直到根据熵池产生新的随机字节之后才返回,所以使用/dev/random比使用/dev/urandom产生大量随机数的速度要慢。

/*

* 这个函数为一个redis instance生成一个160bit的id

* (如果未来显示用则是320bit,即40个字母长度的可显示字符串)。

*/

void getRandomHexChars(char *p, unsigned int len);

{

char *charset = "0123456789abcdef";

unsigned int j;

/* Global state. */

static int seed_initialized = 0;

static unsigned char seed[20]; /* The SHA1 seed, from /dev/urandom. */

static uint64_t counter = 0; /* The counter we hash with the seed. */

// 生成一个随机种子数,存储到seed里面

if (!seed_initialized) {

FILE *fp = fopen("/dev/urandom", "r");

if (fp && fread(seed, sizeof(seed), 1, fp) == 1)

seed_initialized = 1;

if (fp) fclose(fp);

}

if (seed_initialized) {

// 如果从/dev/urandom读取到了随机字符串,则利用SHA算法生成一个id

while (len) {

// len可能并不是20或者40字节的倍数,所以这里要通过循环把p的内容填满

unsigned char digest[20];

SHA1_CTX ctx;

unsigned int copylen = len > 20 ? 20 : len;

SHA1Init(&ctx);

SHA1Update(&ctx, seed, sizeof(seed));

SHA1Update(&ctx, (unsigned char*)&counter, sizeof(counter));

SHA1Final(digest, &ctx);

counter++;

memcpy(p, digest, copylen);

/* Convert to hex digits. */

// 把数字转化为可读字符串,只是这里只用了一个字节的后半部分

for (j = 0; j < copylen; j++) p[j] = charset[p[j] & 0x0F];

// 移动光标

len -= copylen;

p += copylen;

}

}

else {

// 如果从/dev/urandom读取随机字符串失败,则利用时间和当前进程的id来生成一个随机字符串

char *x = p;

unsigned int l = len;

struct timeval tv;

pid_t pid = getpid();

/* Use time and PID to fill the initial array. */

// 先在buf中填充时间的秒和微秒两个部分,然后再补充上进程的id

gettimeofday(&tv, NULL);

if (l >= sizeof(tv.tv_usec)) {

memcpy(x, &tv.tv_usec, sizeof(tv.tv_usec));

l -= sizeof(tv.tv_usec);

x += sizeof(tv.tv_usec);

}

if (l >= sizeof(tv.tv_sec)) {

memcpy(x, &tv.tv_sec, sizeof(tv.tv_sec));

l -= sizeof(tv.tv_sec);

x += sizeof(tv.tv_sec);

}

if (l >= sizeof(pid)) {

memcpy(x, &pid, sizeof(pid));

l -= sizeof(pid);

x += sizeof(pid);

}

// 再利用随机数进行异或后,转化为16进制可视字符串

for (j = 0; j < len; j++) {

p[j] ^= rand();

p[j] = charset[p[j] & 0x0F];

}

}

}

void initServerConfig(void) {

int j;

getRandomHexChars(server.runid,REDIS_RUN_ID_SIZE);

server.configfile = NULL;

server.hz = REDIS_DEFAULT_HZ;

server.runid[REDIS_RUN_ID_SIZE] = '\0';

server.arch_bits = (sizeof(long) == 8) ? 64 : 32;

server.port = REDIS_SERVERPORT;

server.repl_ping_slave_period = REDIS_REPL_PING_SLAVE_PERIOD;

server.repl_timeout = REDIS_REPL_TIMEOUT;

server.repl_min_slaves_to_write = REDIS_DEFAULT_MIN_SLAVES_TO_WRITE;

server.repl_min_slaves_max_lag = REDIS_DEFAULT_MIN_SLAVES_MAX_LAG;

/* Replication related */

server.masterauth = NULL;

server.masterhost = NULL;

server.masterport = 6379;

server.master = NULL;

server.cached_master = NULL;

server.repl_master_initial_offset = -1;

server.repl_state = REDIS_REPL_NONE;

server.repl_syncio_timeout = REDIS_REPL_SYNCIO_TIMEOUT;

server.repl_serve_stale_data = REDIS_DEFAULT_SLAVE_SERVE_STALE_DATA;

server.repl_slave_ro = REDIS_DEFAULT_SLAVE_READ_ONLY;

server.repl_down_since = 0; /* Never connected, repl is down since EVER. */

server.repl_disable_tcp_nodelay = REDIS_DEFAULT_REPL_DISABLE_TCP_NODELAY;

server.repl_diskless_sync = REDIS_DEFAULT_REPL_DISKLESS_SYNC;

server.repl_diskless_sync_delay = REDIS_DEFAULT_REPL_DISKLESS_SYNC_DELAY;

server.slave_priority = REDIS_DEFAULT_SLAVE_PRIORITY;

server.master_repl_offset = 0;

/* Replication partial resync backlog */

server.repl_backlog = NULL;

server.repl_backlog_size = REDIS_DEFAULT_REPL_BACKLOG_SIZE;

server.repl_backlog_histlen = 0;

server.repl_backlog_idx = 0;

server.repl_backlog_off = 0;

server.repl_backlog_time_limit = REDIS_DEFAULT_REPL_BACKLOG_TIME_LIMIT;

server.repl_no_slaves_since = time(NULL);

/* write index */

// server.write_index.start = (long long)((long long)(0XE20150620180647F) * getpid() * server.port * time(NULL));

server.write_index.start = 0;

server.write_index.current = server.write_index.start;

server.slave_write_index = dictCreate(&slaveWriteIndexDictType,NULL);

}

如果进程启动的时候已经被赋予了redis的options,则使用这些options,不再读conf文件。

void loadServerConfig(char *filename, char *options) {

sds config = sdsempty();

char buf[REDIS_CONFIGLINE_MAX+1];

/* Load the file content */

if (filename) {

FILE *fp;

// 如果文件名称为空,则从stdin读取

if (filename[0] == '-' && filename[1] == '\0') {

fp = stdin;

} else {

if ((fp = fopen(filename,"r")) == NULL) {

redisLog(REDIS_WARNING,

"Fatal error, can't open config file '%s'", filename);

exit(1);

}

}

while(fgets(buf,REDIS_CONFIGLINE_MAX+1,fp) != NULL)

config = sdscat(config,buf);

if (fp != stdin) fclose(fp);

}

/* Append the additional options */

if (options) {

config = sdscat(config,"\n");

config = sdscat(config,options);

}

loadServerConfigFromString(config);

sdsfree(config);

}

这个函数中比较重要的就是如果redis的role是slave,则获取master的host和port,并把state设置为REDISREPLCONNECT

void loadServerConfigFromString(char *config) {

char *err = NULL;

int linenum = 0, totlines, i;

int slaveof_linenum = 0;

sds *lines;

// 按照行进行分割,结果存在lines数组中,行数为totlines

lines = sdssplitlen(config,strlen(config),"\n",1,&totlines);

for (i = 0; i < totlines; i++) {

sds *argv;

int argc;

linenum = i+1; //记录行号,一旦出错,下面的loaderr就能说明出错所在的行号

// 去掉tab、换行、回车等空格键

lines[i] = sdstrim(lines[i]," \t\r\n");

// 不处理空行和注释行

if (lines[i][0] == '#' || lines[i][0] == '\0') continue;

// 把每行再进行分割,分割结果存进@argv数组,数组elem个数为args

argv = sdssplitargs(lines[i],&argc);

if (argv == NULL) { // 处理argv为空的情况

err = "Unbalanced quotes in configuration line";

goto loaderr;

}

if (argc == 0) { // 处理element number为0的情况

sdsfreesplitres(argv,argc);

continue;

}

sdstolower(argv[0]); // 把line key转换为消息

/* Execute config directives */

if (!strcasecmp(argv[0],"slaveof") && argc == 3) {

slaveof_linenum = linenum;

server.masterhost = sdsnew(argv[1]);

server.masterport = atoi(argv[2]);

server.repl_state = REDIS_REPL_CONNECT;

} else if (!strcasecmp(argv[0],"repl-ping-slave-period") && argc == 2) {

server.repl_ping_slave_period = atoi(argv[1]);

if (server.repl_ping_slave_period <= 0) {

err = "repl-ping-slave-period must be 1 or greater";

goto loaderr;

}

} else if (!strcasecmp(argv[0],"repl-timeout") && argc == 2) {

server.repl_timeout = atoi(argv[1]);

if (server.repl_timeout <= 0) {

err = "repl-timeout must be 1 or greater";

goto loaderr;

}

} else if (!strcasecmp(argv[0],"repl-disable-tcp-nodelay") && argc==2) {

if ((server.repl_disable_tcp_nodelay = yesnotoi(argv[1])) == -1) {

err = "argument must be 'yes' or 'no'"; goto loaderr;

}

} else if (!strcasecmp(argv[0],"repl-diskless-sync") && argc==2) {

if ((server.repl_diskless_sync = yesnotoi(argv[1])) == -1) {

err = "argument must be 'yes' or 'no'"; goto loaderr;

}

} else if (!strcasecmp(argv[0],"repl-diskless-sync-delay") && argc==2) {

server.repl_diskless_sync_delay = atoi(argv[1]);

if (server.repl_diskless_sync_delay < 0) {

err = "repl-diskless-sync-delay can't be negative";

goto loaderr;

}

} else if (!strcasecmp(argv[0],"repl-backlog-size") && argc == 2) {

long long size = memtoll(argv[1],NULL);

if (size <= 0) {

err = "repl-backlog-size must be 1 or greater.";

goto loaderr;

}

resizeReplicationBacklog(size);

} else if (!strcasecmp(argv[0],"repl-backlog-ttl") && argc == 2) {

server.repl_backlog_time_limit = atoi(argv[1]);

if (server.repl_backlog_time_limit < 0) {

err = "repl-backlog-ttl can't be negative ";

goto loaderr;

}

}

else {

err = "Bad directive or wrong number of arguments"; goto loaderr;

}

// 释放element数组

sdsfreesplitres(argv,argc);

}

// 释放line数组

sdsfreesplitres(lines,totlines);

return;

loaderr:

fprintf(stderr, "\n*** FATAL CONFIG FILE ERROR ***\n");

fprintf(stderr, "Reading the configuration file, at line %d\n", linenum);

fprintf(stderr, ">>> '%s'\n", lines[i]);

fprintf(stderr, "%s\n", err);

exit(1);

}static int anetListen(char *err, int s, struct sockaddr *sa, socklen_t len, int backlog) {

if (bind(s,sa,len) == -1) {

anetSetError(err, "bind: %s", strerror(errno));

close(s);

return ANET_ERR;

}

if (listen(s, backlog) == -1) {

anetSetError(err, "listen: %s", strerror(errno));

close(s);

return ANET_ERR;

}

return ANET_OK;

}

static int _anetTcpServer(char *err, int port, char *bindaddr, int af, int backlog)

{

int s, rv;

char _port[6]; /* strlen("65535") */

struct addrinfo hints, *servinfo, *p;

snprintf(_port,6,"%d",port);

memset(&hints,0,sizeof(hints));

hints.ai_family = af;

hints.ai_socktype = SOCK_STREAM;

hints.ai_flags = AI_PASSIVE; /* No effect if bindaddr != NULL */

if ((rv = getaddrinfo(bindaddr,_port,&hints,&servinfo)) != 0) {

anetSetError(err, "%s", gai_strerror(rv));

return ANET_ERR;

}

for (p = servinfo; p != NULL; p = p->ai_next) {

if ((s = socket(p->ai_family,p->ai_socktype,p->ai_protocol)) == -1)

continue;

if (af == AF_INET6 && anetV6Only(err,s) == ANET_ERR) goto error;

if (anetSetReuseAddr(err,s) == ANET_ERR) goto error;

if (anetListen(err,s,p->ai_addr,p->ai_addrlen,backlog) == ANET_ERR) goto error;

goto end;

}

if (p == NULL) {

anetSetError(err, "unable to bind socket");

goto error;

}

error:

s = ANET_ERR;

end:

freeaddrinfo(servinfo);

return s;

}

int anetTcpServer(char *err, int port, char *bindaddr, int backlog)

{

return _anetTcpServer(err, port, bindaddr, AF_INET, backlog);

}

int listenToPort(int port, int *fds, int *count) {

int j;

/* Force binding of 0.0.0.0 if no bind address is specified, always

* entering the loop if j == 0. */

if (server.bindaddr_count == 0) server.bindaddr[0] = NULL;

for (j = 0; j < server.bindaddr_count || j == 0; j++) {

if (server.bindaddr[j] == NULL) {

/* Bind * for both IPv6 and IPv4, we enter here only if

* server.bindaddr_count == 0. */

fds[*count] = anetTcp6Server(server.neterr,port,NULL,

server.tcp_backlog);

if (fds[*count] != ANET_ERR) {

anetNonBlock(NULL,fds[*count]);

(*count)++;

}

fds[*count] = anetTcpServer(server.neterr,port,NULL,

server.tcp_backlog);

if (fds[*count] != ANET_ERR) {

anetNonBlock(NULL,fds[*count]);

(*count)++;

}

/* Exit the loop if we were able to bind * on IPv4 or IPv6,

* otherwise fds[*count] will be ANET_ERR and we'll print an

* error and return to the caller with an error. */

if (*count) break;

} else if (strchr(server.bindaddr[j],':')) {

/* Bind IPv6 address. */

fds[*count] = anetTcp6Server(server.neterr,port,server.bindaddr[j],

server.tcp_backlog);

} else {

/* Bind IPv4 address. */

fds[*count] = anetTcpServer(server.neterr,port,server.bindaddr[j],

server.tcp_backlog);

}

if (fds[*count] == ANET_ERR) {

redisLog(REDIS_WARNING,

"Creating Server TCP listening socket %s:%d: %s",

server.bindaddr[j] ? server.bindaddr[j] : "*",

port, server.neterr);

return REDIS_ERR;

}

anetNonBlock(NULL,fds[*count]);

(*count)++;

}

return REDIS_OK;

}

void initServer(void) {

/* Open the TCP listening socket for the user commands. */

if (server.port != 0 &&

listenToPort(server.port,server.ipfd,&server.ipfd_count) == REDIS_ERR)

exit(1);

}

}void initServer(void) {

/* Create an event handler for accepting new connections in TCP and Unix

* domain sockets. */

for (j = 0; j < server.ipfd_count; j++) {

if (aeCreateFileEvent(server.el, server.ipfd[j], AE_READABLE,

acceptTcpHandler,NULL) == AE_ERR)

{

redisPanic(

"Unrecoverable error creating server.ipfd file event.");

}

}

}static int anetGenericAccept(char *err, int s, struct sockaddr *sa, socklen_t *len) {

int fd;

while(1) {

fd = accept(s,sa,len);

if (fd == -1) {

if (errno == EINTR)

continue;

else {

anetSetError(err, "accept: %s", strerror(errno));

return ANET_ERR;

}

}

break;

}

return fd;

}

int anetTcpAccept(char *err, int s, char *ip, size_t ip_len, int *port) {

int fd;

struct sockaddr_storage sa;

socklen_t salen = sizeof(sa);

if ((fd = anetGenericAccept(err,s,(struct sockaddr*)&sa,&salen)) == -1)

return ANET_ERR;

if (sa.ss_family == AF_INET) {

struct sockaddr_in *s = (struct sockaddr_in *)&sa;

if (ip) inet_ntop(AF_INET,(void*)&(s->sin_addr),ip,ip_len);

if (port) *port = ntohs(s->sin_port);

} else {

struct sockaddr_in6 *s = (struct sockaddr_in6 *)&sa;

if (ip) inet_ntop(AF_INET6,(void*)&(s->sin6_addr),ip,ip_len);

if (port) *port = ntohs(s->sin6_port);

}

return fd;

}创建客户端后注册接受client的请求的回调函数readQueryFromClient,最后把客户端放入server的客户端集合:server.clients。

如果client集合超限[默认是10000]就给client返回err msg,然后再释放client句柄并关闭连接。

redisClient *createClient(int fd) {

redisClient *c = zmalloc(sizeof(redisClient));

/* passing -1 as fd it is possible to create a non connected client.

* This is useful since all the Redis commands needs to be executed

* in the context of a client. When commands are executed in other

* contexts (for instance a Lua script) we need a non connected client. */

if (fd != -1) {

anetNonBlock(NULL,fd);

// 把fd设置为nodelay类型,有利于数据及时发送给客户端

anetEnableTcpNoDelay(NULL,fd);

if (server.tcpkeepalive)

anetKeepAlive(NULL,fd,server.tcpkeepalive);

// 注册接受客户端请求的函数

if (aeCreateFileEvent(server.el,fd,AE_READABLE,

readQueryFromClient, c) == AE_ERR)

{

close(fd);

zfree(c);

return NULL;

}

}

// 把外部请求客户端放入客户端集合,即fake client是不会被放进去的

if (fd != -1) listAddNodeTail(server.clients,c);

initClientMultiState(c);

return c;

}

#define MAX_ACCEPTS_PER_CALL 1000

static void acceptCommonHandler(int fd, int flags) {

redisClient *c;

if ((c = createClient(fd)) == NULL) {

redisLog(REDIS_WARNING,

"Error registering fd event for the new client: %s (fd=%d)",

strerror(errno),fd);

close(fd); /* May be already closed, just ignore errors */

return;

}

/* If maxclient directive is set and this is one client more... close the

* connection. Note that we create the client instead to check before

* for this condition, since now the socket is already set in non-blocking

* mode and we can send an error for free using the Kernel I/O */

// 如果连接过多[#define REDIS_MAX_CLIENTS 10000],先给client发送一个error msg,

// 然后就把连接代表的client给free掉

//

// 这里解释了为何最后才检查超限的原因:需要给客户端发送error message,以让用户明白错误的原因。

if (listLength(server.clients) > server.maxclients) {

char *err = "-ERR max number of clients reached\r\n";

/* That's a best effort error message, don't check write errors */

if (write(c->fd,err,strlen(err)) == -1) {

/* Nothing to do, Just to avoid the warning... */

}

server.stat_rejected_conn++;

freeClient(c);

return;

}

server.stat_numconnections++;

c->flags |= flags;

}目前逻辑步骤是:接受客户端的请求;创建对应的client句柄;插入client集合;检查client集合是否超限。

那为什么不先检查server.clients的数目后直接把超限的连接请求给拒绝掉呢?

acceptCommonHandler()函数里给出了一段解释:需要给客户端发送error message,以让用户明白错误的原因。

// 接受外部请求,一次最多接受1000个请求

void acceptTcpHandler(aeEventLoop *el, int fd, void *privdata, int mask) {

int cport, cfd, max = MAX_ACCEPTS_PER_CALL;

char cip[REDIS_IP_STR_LEN];

REDIS_NOTUSED(el);

REDIS_NOTUSED(mask);

REDIS_NOTUSED(privdata);

while(max--) {

cfd = anetTcpAccept(server.neterr, fd, cip, sizeof(cip), &cport);

if (cfd == ANET_ERR) {

if (errno != EWOULDBLOCK)

redisLog(REDIS_WARNING,

"Accepting client connection: %s", server.neterr);

return;

}

redisLog(REDIS_VERBOSE,"Accepted %s:%d", cip, cport);

acceptCommonHandler(cfd,0);

}

}overcommitmemory文件指定了内核针对内存分配的策略,overcommitmemory值可以是0、1、2。

int linuxOvercommitMemoryValue(void) {

FILE *fp = fopen("/proc/sys/vm/overcommit_memory","r");

char buf[64];

if (!fp) return -1;

if (fgets(buf,64,fp) == NULL) {

fclose(fp);

return -1;

}

fclose(fp);

return atoi(buf);

}Transparent Huge Page用户合并物理内存的page

内核线程khugepaged周期性自动扫描内存,自动将地址连续可以合并的4KB的普通Page并成2MB的Huge Page。

Redhat系统,通过内核参数/sys/kernel/mm/redhattransparenthugepage/enabled打开.

其他Linux系统,通过内核参数/sys/kernel/mm/transparent_hugepage/enabled打开.

/* Returns 1 if Transparent Huge Pages support is enabled in the kernel.

* Otherwise (or if we are unable to check) 0 is returned. */

int THPIsEnabled(void) {

char buf[1024];

FILE *fp = fopen("/sys/kernel/mm/transparent_hugepage/enabled","r");

if (!fp) return 0;

if (fgets(buf,sizeof(buf),fp) == NULL) {

fclose(fp);

return 0;

}

fclose(fp);

return (strstr(buf,"[never]") == NULL) ? 1 : 0;

}void linuxMemoryWarnings(void) {

if (linuxOvercommitMemoryValue() == 0) {

redisLog(REDIS_WARNING,"WARNING overcommit_memory is set to 0! Background save may fail under low memory condition. To fix this issue add 'vm.overcommit_memory = 1' to /etc/sysctl.conf and then reboot or run the command 'sysctl vm.overcommit_memory=1' for this to take effect.");

}

if (THPIsEnabled()) {

redisLog(REDIS_WARNING,"WARNING you have Transparent Huge Pages (THP) support enabled in your kernel. This will create latency and memory usage issues with Redis. To fix this issue run the command 'echo never > /sys/kernel/mm/transparent_hugepage/enabled' as root, and add it to your /etc/rc.local in order to retain the setting after a reboot. Redis must be restarted after THP is disabled.");

}

}/* Function called at startup to load RDB or AOF file in memory. */

void loadDataFromDisk(void) {

long long start = ustime();

if (server.aof_state == REDIS_AOF_ON) {

// aof模式下记载aof文件

if (loadAppendOnlyFile(server.aof_filename) == REDIS_OK)

redisLog(REDIS_NOTICE,"DB loaded from append only file: %.3f seconds",(float)(ustime()-start)/1000000);

} else {

// 加载rdb文件

if (rdbLoad(server.rdb_filename) == REDIS_OK) {

redisLog(REDIS_NOTICE,"DB loaded from disk: %.3f seconds",

(float)(ustime()-start)/1000000);

} else if (errno != ENOENT) {

redisLog(REDIS_WARNING,"Fatal error loading the DB: %s. Exiting.",strerror(errno));

exit(1);

}

}

}fake client用于aof模式下load aof文件的时候,重放客户端请求然后把数据写进内存。redis不会对其进行回复。

int prepareClientToWrite(redisClient *c) {

if (c->fd <= 0) return REDIS_ERR; /* Fake client for AOF loading. */

}

// 在给client回复的时候,如果判别出是fake clent则不会对client进行回复

void addReply(redisClient *c, robj *obj) {

if (prepareClientToWrite(c) != REDIS_OK) return;

}

/* In Redis commands are always executed in the context of a client, so in

* order to load the append only file we need to create a fake client. */

// 创建一个fake client,用于重放aof文件

struct redisClient *createFakeClient(void) {

struct redisClient *c = zmalloc(sizeof(*c));

selectDb(c,0);

c->fd = -1; // redis根据fd判断其是否为一个fake client

c->name = NULL;

c->querybuf = sdsempty();

c->querybuf_peak = 0;

c->argc = 0;

c->argv = NULL;

c->bufpos = 0;

c->flags = 0;

c->btype = REDIS_BLOCKED_NONE;

/* We set the fake client as a slave waiting for the synchronization

* so that Redis will not try to send replies to this client. */

c->replstate = REDIS_REPL_WAIT_BGSAVE_START;

c->reply = listCreate();

c->reply_bytes = 0;

c->obuf_soft_limit_reached_time = 0;

c->watched_keys = listCreate();

c->peerid = NULL;

listSetFreeMethod(c->reply,decrRefCountVoid);

listSetDupMethod(c->reply,dupClientReplyValue);

initClientMultiState(c);

return c;

}/* Mark that we are loading in the global state and setup the fields

* needed to provide loading stats. */

// 设置loading flag为1

void startLoading(FILE *fp) {

struct stat sb;

/* Load the DB */

server.loading = 1;

server.loading_start_time = time(NULL);

server.loading_loaded_bytes = 0;

if (fstat(fileno(fp), &sb) == -1) {

server.loading_total_bytes = 0;

} else {

server.loading_total_bytes = sb.st_size;

}

}

/* Loading finished */

// 设置loading flag为0

void stopLoading(void) {

server.loading = 0;

}

/* This function is called by Redis in order to process a few events from

* time to time while blocked into some not interruptible operation.

* This allows to reply to clients with the -LOADING error while loading the

* data set at startup or after a full resynchronization with the master

* and so forth.

*

* It calls the event loop in order to process a few events. Specifically we

* try to call the event loop for times as long as we receive acknowledge that

* some event was processed, in order to go forward with the accept, read,

* write, close sequence needed to serve a client.

*

* The function returns the total number of events processed. */

// 阻塞在某个任务上时,抽出时间执行一些外部的请求任务

int processEventsWhileBlocked(void) {

int iterations = 4; /* See the function top-comment. */

int count = 0;

while (iterations--) {

int events = aeProcessEvents(server.el, AE_FILE_EVENTS|AE_DONT_WAIT);

if (!events) break;

count += events;

}

return count;

}

/* Replay the append log file. On success REDIS_OK is returned. On non fatal

* error (the append only file is zero-length) REDIS_ERR is returned. On

* fatal error an error message is logged and the program exists. */

// 重放aof文件,成功返回REDIS_OK,遇到fatal error[aof文件大小为0]则返回REDIS_ERR

// 遇到fatal error,则把错误信息写入log,redis退出

int loadAppendOnlyFile(char *filename) {

struct redisClient *fakeClient;

FILE *fp = fopen(filename,"r");

struct redis_stat sb;

int old_aof_state = server.aof_state;

long loops = 0;

off_t valid_up_to = 0; /* Offset of the latest well-formed command loaded. */

// 文件大小为0

if (fp && redis_fstat(fileno(fp),&sb) != -1 && sb.st_size == 0) {

server.aof_current_size = 0;

fclose(fp);

return REDIS_ERR;

}

if (fp == NULL) {

redisLog(REDIS_WARNING,"Fatal error: can't open the append log file for reading: %s",strerror(errno));

exit(1);

}

/* Temporarily disable AOF, to prevent EXEC from feeding a MULTI

* to the same file we're about to read. */

// 设置状态为REDIS_AOF_OFF,防止redis向aof文件写数据

server.aof_state = REDIS_AOF_OFF;

fakeClient = createFakeClient();

startLoading(fp); // 设置server.loading为1

while(1) {

int argc, j;

unsigned long len;

robj **argv;

char buf[128];

sds argsds;

struct redisCommand *cmd;

/* Serve the clients from time to time */

if (!(loops++ % 1000)) {

loadingProgress(ftello(fp)); // server.loading_loaded_bytes = pos;

processEventsWhileBlocked();

}

if (fgets(buf,sizeof(buf),fp) == NULL) {

if (feof(fp))

break;

else

goto readerr;

}

if (buf[0] != '*') goto fmterr;

if (buf[1] == '\0') goto readerr;

argc = atoi(buf+1); // 有效参数个数

if (argc < 1) goto fmterr;

argv = zmalloc(sizeof(robj*)*argc);

fakeClient->argc = argc;

fakeClient->argv = argv;

// 循环读出所有的参数

for (j = 0; j < argc; j++) {

if (fgets(buf,sizeof(buf),fp) == NULL) {

fakeClient->argc = j; /* Free up to j-1. */

freeFakeClientArgv(fakeClient);

goto readerr;

}

if (buf[0] != '$') goto fmterr;

len = strtol(buf+1,NULL,10);

argsds = sdsnewlen(NULL,len);

if (len && fread(argsds,len,1,fp) == 0) {

sdsfree(argsds);

fakeClient->argc = j; /* Free up to j-1. */

freeFakeClientArgv(fakeClient);

goto readerr;

}

argv[j] = createObject(REDIS_STRING,argsds); // 为某个参数赋值

if (fread(buf,2,1,fp) == 0) {

fakeClient->argc = j+1; /* Free up to j. */

freeFakeClientArgv(fakeClient);

goto readerr; /* discard CRLF */

}

}

/* Command lookup */

// 查找命令是否有效

cmd = lookupCommand(argv[0]->ptr);

if (!cmd) {

redisLog(REDIS_WARNING,"Unknown command '%s' reading the append only file", (char*)argv[0]->ptr);

exit(1);

}

/* Run the command in the context of a fake client */

// 重放命令

cmd->proc(fakeClient);

/* The fake client should not have a reply */

// fake client不应该收到reply

redisAssert(fakeClient->bufpos == 0 && listLength(fakeClient->reply) == 0);

/* The fake client should never get blocked */

// fake client不应该处于blocked模式

redisAssert((fakeClient->flags & REDIS_BLOCKED) == 0);

/* Clean up. Command code may have changed argv/argc so we use the

* argv/argc of the client instead of the local variables. */

// 释放假连接的argv资源

freeFakeClientArgv(fakeClient);

if (server.aof_load_truncated) valid_up_to = ftello(fp);

}

/* This point can only be reached when EOF is reached without errors.

* If the client is in the middle of a MULTI/EXEC, log error and quit. */

if (fakeClient->flags & REDIS_MULTI) goto uxeof;

loaded_ok: /* DB loaded, cleanup and return REDIS_OK to the caller. */

fclose(fp);

freeFakeClient(fakeClient);

server.aof_state = old_aof_state;

stopLoading();

aofUpdateCurrentSize();

server.aof_rewrite_base_size = server.aof_current_size;

return REDIS_OK;

readerr: /* Read error. If feof(fp) is true, fall through to unexpected EOF. */

if (!feof(fp)) {

redisLog(REDIS_WARNING,"Unrecoverable error reading the append only file: %s", strerror(errno));

exit(1);

}

uxeof: /* Unexpected AOF end of file. */

if (server.aof_load_truncated) {

redisLog(REDIS_WARNING,"!!! Warning: short read while loading the AOF file !!!");

redisLog(REDIS_WARNING,"!!! Truncating the AOF at offset %llu !!!",

(unsigned long long) valid_up_to);

if (valid_up_to == -1 || truncate(filename,valid_up_to) == -1) {

if (valid_up_to == -1) {

redisLog(REDIS_WARNING,"Last valid command offset is invalid");

} else {

redisLog(REDIS_WARNING,"Error truncating the AOF file: %s",

strerror(errno));

}

} else {

/* Make sure the AOF file descriptor points to the end of the

* file after the truncate call. */

if (server.aof_fd != -1 && lseek(server.aof_fd,0,SEEK_END) == -1) {

redisLog(REDIS_WARNING,"Can't seek the end of the AOF file: %s",

strerror(errno));

} else {

redisLog(REDIS_WARNING,

"AOF loaded anyway because aof-load-truncated is enabled");

goto loaded_ok;

}

}

}

redisLog(REDIS_WARNING,"Unexpected end of file reading the append only file. You can: 1) Make a backup of your AOF file, then use ./redis-check-aof --fix <filename>. 2) Alternatively you can set the 'aof-load-truncated' configuration option to yes and restart the server.");

exit(1);

fmterr: /* Format error. */

redisLog(REDIS_WARNING,"Bad file format reading the append only file: make a backup of your AOF file, then use ./redis-check-aof --fix <filename>");

exit(1);

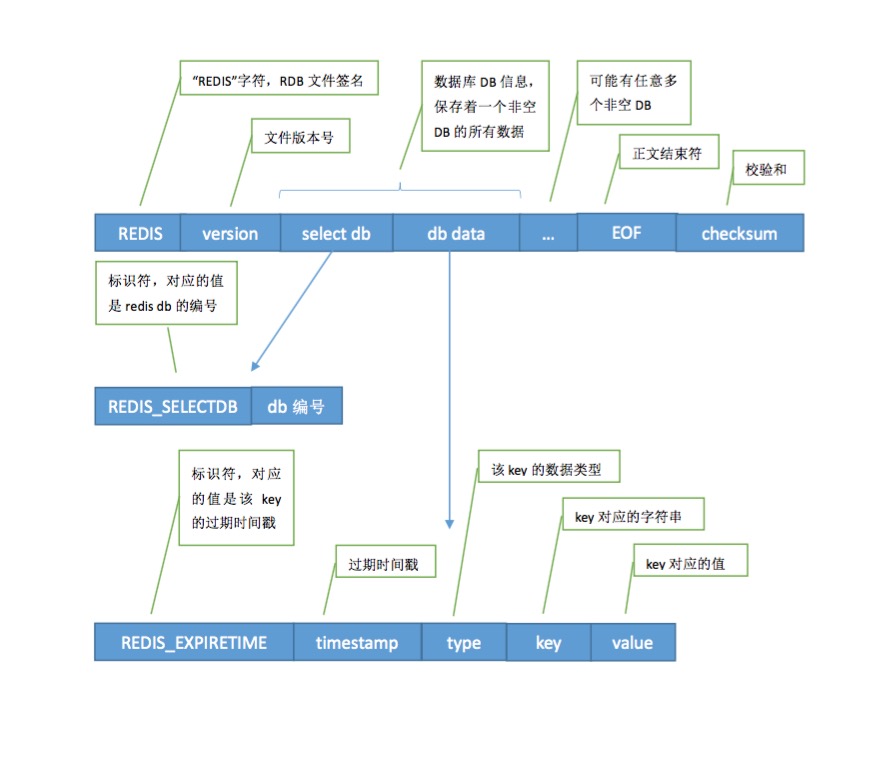

}rdb文件中有这两个字段:repl-id和repl-offset,对应redis代码中masterreplid和masterrepl_offsetreplication一文中说明这两个字段唯一的标记了redis master的一组dataset。

如果rdb文件不存在,则redis启动的时候初始offset为0;存在,则id和offset继承自rdb文件中的这两个字段值。

rdb的文件格式如下:

int rdbLoad(char *filename) {

uint32_t dbid;

int type, rdbver;

redisDb *db = server.db+0;

char buf[1024];

long long expiretime, now = mstime();

FILE *fp;

rio rdb;

if ((fp = fopen(filename,"r")) == NULL) return REDIS_ERR;

rioInitWithFile(&rdb,fp);

rdb.update_cksum = rdbLoadProgressCallback;

rdb.max_processing_chunk = server.loading_process_events_interval_bytes;

if (rioRead(&rdb,buf,9) == 0) goto eoferr;

buf[9] = '\0';

// 检查文件开头

if (memcmp(buf,"REDIS",5) != 0) {

fclose(fp);

redisLog(REDIS_WARNING,"Wrong signature trying to load DB from file");

errno = EINVAL;

return REDIS_ERR;

}

rdbver = atoi(buf+5);

if (rdbver < 1 || rdbver > REDIS_RDB_VERSION) {

fclose(fp);

redisLog(REDIS_WARNING,"Can't handle RDB format version %d",rdbver);

errno = EINVAL;

return REDIS_ERR;

}

startLoading(fp);

while(1) {

robj *key, *val;

expiretime = -1;

/* Read type. */

// 读取key的超时时间

if ((type = rdbLoadType(&rdb)) == -1) goto eoferr;

if (type == REDIS_RDB_OPCODE_EXPIRETIME) {

if ((expiretime = rdbLoadTime(&rdb)) == -1) goto eoferr;

/* We read the time so we need to read the object type again. */

if ((type = rdbLoadType(&rdb)) == -1) goto eoferr;

/* the EXPIRETIME opcode specifies time in seconds, so convert

* into milliseconds. */

expiretime *= 1000;

} else if (type == REDIS_RDB_OPCODE_EXPIRETIME_MS) {

/* Milliseconds precision expire times introduced with RDB

* version 3. */

if ((expiretime = rdbLoadMillisecondTime(&rdb)) == -1) goto eoferr;

/* We read the time so we need to read the object type again. */

if ((type = rdbLoadType(&rdb)) == -1) goto eoferr;

}

// 到了文件末尾,终止流程

if (type == REDIS_RDB_OPCODE_EOF)

break;

/* Handle SELECT DB opcode as a special case */

// 切换db

if (type == REDIS_RDB_OPCODE_SELECTDB) {

if ((dbid = rdbLoadLen(&rdb,NULL)) == REDIS_RDB_LENERR)

goto eoferr;

if (dbid >= (unsigned)server.dbnum) {

redisLog(REDIS_WARNING,"FATAL: Data file was created with a Redis server configured to handle more than %d databases. Exiting\n", server.dbnum);

exit(1);

}

db = server.db+dbid;

continue;

}

/* Read key */

// 获取key

if ((key = rdbLoadStringObject(&rdb)) == NULL) goto eoferr;

/* Read value */

// 获取value

if ((val = rdbLoadObject(type,&rdb)) == NULL) goto eoferr;

/* Check if the key already expired. This function is used when loading

* an RDB file from disk, either at startup, or when an RDB was

* received from the master. In the latter case, the master is

* responsible for key expiry. If we would expire keys here, the

* snapshot taken by the master may not be reflected on the slave. */

// 判断key是否超时

if (server.masterhost == NULL && expiretime != -1 && expiretime < now) {

decrRefCount(key);

decrRefCount(val);

continue;

}

/* Add the new object in the hash table */

// 如果没有超时,则插入数据库

dbAdd(db,key,val);

/* Set the expire time if needed */

// 设置key的超时时间

if (expiretime != -1) setExpire(db,key,expiretime);

// 释放key

decrRefCount(key);

}

/* Verify the checksum if RDB version is >= 5 */

// 读取文件的checksum,判断其rdb是否完整

if (rdbver >= 5 && server.rdb_checksum) {

uint64_t cksum, expected = rdb.cksum;

if (rioRead(&rdb,&cksum,8) == 0) goto eoferr;

memrev64ifbe(&cksum);

if (cksum == 0) {

redisLog(REDIS_WARNING,"RDB file was saved with checksum disabled: no check performed.");

} else if (cksum != expected) {

redisLog(REDIS_WARNING,"Wrong RDB checksum. Aborting now.");

exit(1);

}

}

fclose(fp);

stopLoading();

return REDIS_OK;

eoferr: /* unexpected end of file is handled here with a fatal exit */

redisLog(REDIS_WARNING,"Short read or OOM loading DB. Unrecoverable error, aborting now.");

exit(1);

return REDIS_ERR; /* Just to avoid warning */

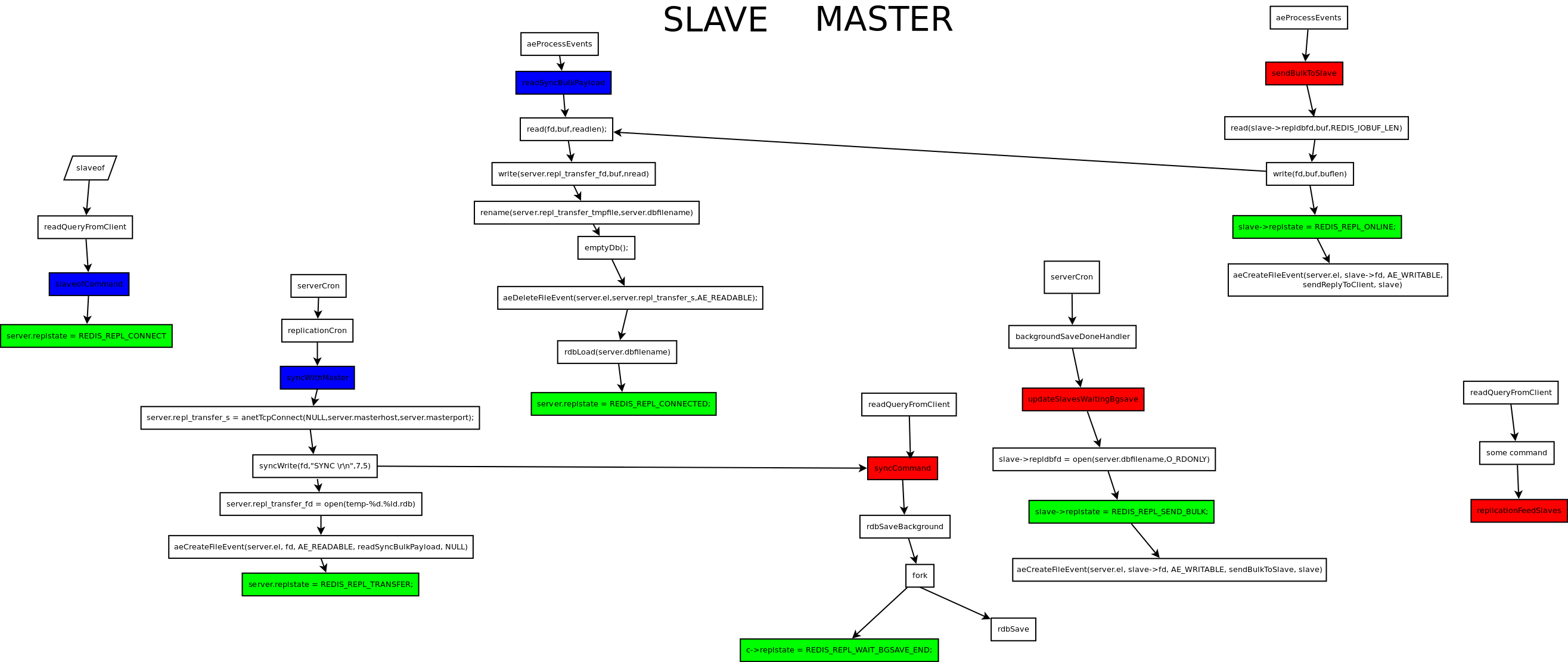

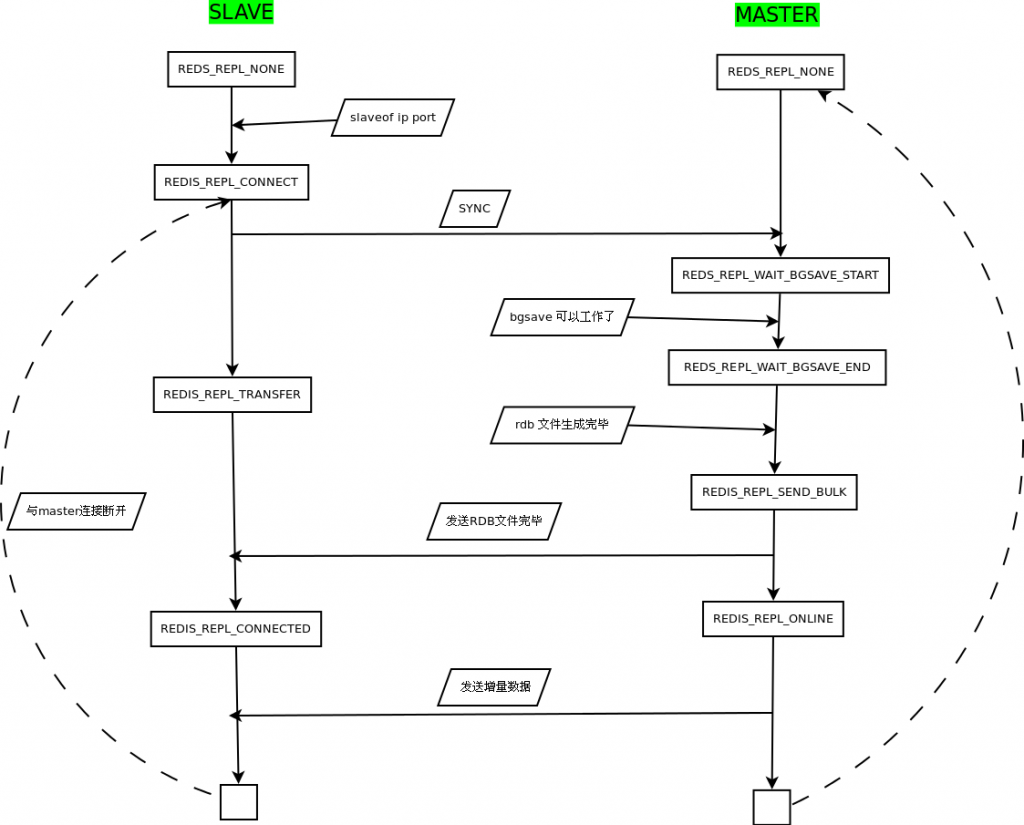

}正常运行的实例[master or slave],收到slaveof命令后更换master,启动slave模式。

先断绝与已有的master以及slaves之间的连接,并放弃收到的或者将要发出的增量同步数据,然后初始化相关配置,设置状态为REDISREPLCONNECT。

void slaveofCommand(redisClient *c) {

long port;

if ((getLongFromObjectOrReply(c, c->argv[2], &port, NULL) != REDIS_OK))

return;

/* Check if we are already attached to the specified slave */

// 如果已经attach到指定的master[host:port],则拒绝再次重连,返回ok

if (server.masterhost && !strcasecmp(server.masterhost,c->argv[1]->ptr)

&& server.masterport == port) {

redisLog(REDIS_NOTICE,"SLAVE OF would result into synchronization with the master we are already connected with. No operation performed.");

addReplySds(c,sdsnew("+OK Already connected to specified master\r\n"));

return;

}

/* There was no previous master or the user specified a different one,

* we can continue. */

// 连接指定的redis instance

replicationSetMaster(c->argv[1]->ptr, port);

redisLog(REDIS_NOTICE,"SLAVE OF %s:%d enabled (user request)",

addReply(c,shared.ok);

}

/* Set replication to the specified master address and port. */

void replicationSetMaster(char *ip, int port) {

sdsfree(server.masterhost);

server.masterhost = sdsnew(ip);

server.masterport = port;

if (server.master) freeClient(server.master); // 断开与旧的master之间的连接

disconnectAllBlockedClients(); /* Clients blocked in master, now slave. */ // 断开与所有client之间的连接

disconnectSlaves(); /* Force our slaves to resync with us as well. */ // 断开与所有slave之间的连接

replicationDiscardCachedMaster(); /* Don't try a PSYNC. */ // 放弃master发来的增量数据

freeReplicationBacklog(); /* Don't allow our chained slaves to PSYNC. */ // 不再把增量数据同步给slaves

cancelReplicationHandshake(); // 停止心跳

// 设置replication初始状态以及相关字段数据,同loadServerConfigFromString分析slaveof配置时设置相关字段的值一样

server.repl_state = REDIS_REPL_CONNECT;

server.master_repl_offset = 0;

server.repl_down_since = 0;

}redis的timer响应函数ServerCron每秒调用一次replication的周期函数replicationCron。这个函数检查到slave还没有成功连接master时,先进行连接动作。

连接动作由connectWithMaster完成。连接过程中会发出psync命令,尔后把状态更改为REDISREPLCONNECTING。

int serverCron(struct aeEventLoop *eventLoop, long long id, void *clientData) {

/* Replication cron function -- used to reconnect to master and

* to detect transfer failures. */

run_with_period(1000) replicationCron();

}

/* Replication cron function, called 1 time per second. */

void replicationCron(void) {

/* Check if we should connect to a MASTER */

if (server.repl_state == REDIS_REPL_CONNECT) {

redisLog(REDIS_NOTICE,"Connecting to MASTER %s:%d",

server.masterhost, server.masterport);

if (connectWithMaster() == REDIS_OK) {

redisLog(REDIS_NOTICE,"MASTER <-> SLAVE sync started");

}

}

}

int connectWithMaster(void) {

int fd;

fd = anetTcpNonBlockBindConnect(NULL,

server.masterhost,server.masterport,REDIS_BIND_ADDR);

if (fd == -1) {

redisLog(REDIS_WARNING,"Unable to connect to MASTER: %s",

strerror(errno));

return REDIS_ERR;

}

if (aeCreateFileEvent(server.el,fd,AE_READABLE|AE_WRITABLE,syncWithMaster,NULL) ==

AE_ERR)

{

close(fd);

redisLog(REDIS_WARNING,"Can't create readable event for SYNC");

return REDIS_ERR;

}

server.repl_transfer_lastio = server.unixtime;

server.repl_transfer_s = fd;

server.repl_state = REDIS_REPL_CONNECTING;

return REDIS_OK;

}如果处于REDISREPLCONNECTING or REDISREPLRECEIVEPONG的状态,而且距离上次接收数据时间已经超时,则断开与master之间的连接,把状态置为REDISREPL_CONNECT。

void replicationCron(void) {

/* Non blocking connection timeout? */

if (server.masterhost &&

(server.repl_state == REDIS_REPL_CONNECTING ||

server.repl_state == REDIS_REPL_RECEIVE_PONG) &&

(time(NULL)-server.repl_transfer_lastio) > server.repl_timeout)

{

redisLog(REDIS_WARNING,"Timeout connecting to the MASTER...");

undoConnectWithMaster();

}

}

void undoConnectWithMaster(void) {

int fd = server.repl_transfer_s;

redisAssert(server.repl_state == REDIS_REPL_CONNECTING ||

server.repl_state == REDIS_REPL_RECEIVE_PONG);

aeDeleteFileEvent(server.el,fd,AE_READABLE|AE_WRITABLE);

close(fd);

server.repl_transfer_s = -1;

server.repl_state = REDIS_REPL_CONNECT;

}正常的connect异步流程是:先connect,而后判断fd是否可写,最后再判断连接是否有误。而上面的连接过程中,connect成功后就直接发出了PSYNC命令,所以收到其reply函数syncWithMaster就意味着server.synctransfers确实可写。

syncWithMaster函数起始逻辑就是判断fd是否有error,这个是继续连接流程的第三步,如果没有error就可以确认连接可读可写而且没有error,此时就可以删除对可写事件的关注。

确定没有错误后再发出PING命令,状态更改为REDISREPLRECEIVE_PONG。

void syncWithMaster(aeEventLoop *el, int fd, void *privdata, int mask) {

char tmpfile[256], *err;

int dfd, maxtries = 5;

int sockerr = 0, psync_result;

socklen_t errlen = sizeof(sockerr);

REDIS_NOTUSED(el);

REDIS_NOTUSED(privdata);

REDIS_NOTUSED(mask);

/* If this event fired after the user turned the instance into a master

* with SLAVEOF NO ONE we must just return ASAP. */

// 如果收到了SLAVEOF NO ONE命令,则立即关闭与master之间的连接,并退出

if (server.repl_state == REDIS_REPL_NONE) {

close(fd);

return;

}

// 检查连接是否有问题

/* Check for errors in the socket. */

if (getsockopt(fd, SOL_SOCKET, SO_ERROR, &sockerr, &errlen) == -1)

sockerr = errno;

if (sockerr) {

aeDeleteFileEvent(server.el,fd,AE_READABLE|AE_WRITABLE);

redisLog(REDIS_WARNING,"Error condition on socket for SYNC: %s",

strerror(sockerr));

goto error;

}

/* If we were connecting, it's time to send a non blocking PING, we want to

* make sure the master is able to reply before going into the actual

* replication process where we have long timeouts in the order of

* seconds (in the meantime the slave would block). */

// 如果还在连接中而未确认连接已经成功,需要确认master能够对PING命令回复PONG,则需要以阻塞形式把PING命令发送出去

if (server.repl_state == REDIS_REPL_CONNECTING) {

redisLog(REDIS_NOTICE,"Non blocking connect for SYNC fired the event.");

/* Delete the writable event so that the readable event remains

* registered and we can wait for the PONG reply. */

// 删除写事件,只保留读事件,等地啊PONG响应

aeDeleteFileEvent(server.el,fd,AE_WRITABLE);

server.repl_state = REDIS_REPL_RECEIVE_PONG;

/* Send the PING, don't check for errors at all, we have the timeout

* that will take care about this. */

// 此处并不检查是否遇到error,如果超时后内容还没有发送出去,上面2.2小节与处理这个逻辑:超时处理

syncWrite(fd,"PING\r\n",6,100);

return;

}

}/* Wait for milliseconds until the given file descriptor becomes

* writable/readable/exception */

// 借用poll函数阻塞@milliseconds,然后判断@fd是否满足条件@mask

int aeWait(int fd, int mask, long long milliseconds) {

struct pollfd pfd;

int retmask = 0, retval;

memset(&pfd, 0, sizeof(pfd));

pfd.fd = fd;

if (mask & AE_READABLE) pfd.events |= POLLIN;

if (mask & AE_WRITABLE) pfd.events |= POLLOUT;

if ((retval = poll(&pfd, 1, milliseconds))== 1) {

if (pfd.revents & POLLIN) retmask |= AE_READABLE;

if (pfd.revents & POLLOUT) retmask |= AE_WRITABLE;

if (pfd.revents & POLLERR) retmask |= AE_WRITABLE;

if (pfd.revents & POLLHUP) retmask |= AE_WRITABLE;

return retmask;

} else {

return retval;

}

}

/* Redis performs most of the I/O in a nonblocking way, with the exception

* of the SYNC command where the slave does it in a blocking way, and

* the MIGRATE command that must be blocking in order to be atomic from the

* point of view of the two instances (one migrating the key and one receiving

* the key). This is why need the following blocking I/O functions.

*

* All the functions take the timeout in milliseconds. */

// redis执行大部分命令都是以异步方式运行,但sync和migrate任务除外。

// 因为migrate任务是执行数据同步工作,命令执行完就意味着两端的数据是一样的,所以须以同步方式执行

#define REDIS_SYNCIO_RESOLUTION 10 /* Resolution in milliseconds */

/* Write the specified payload to 'fd'. If writing the whole payload will be

* done within 'timeout' milliseconds the operation succeeds and 'size' is

* returned. Otherwise the operation fails, -1 is returned, and an unspecified

* partial write could be performed against the file descriptor. */

// 以阻塞形式把ptr[size]通过连接@fd发送出去

ssize_t syncWrite(int fd, char *ptr, ssize_t size, long long timeout) {

ssize_t nwritten, ret = size;

long long start = mstime();

long long remaining = timeout;

while(1) {

// 修正等待时间为10ms,因为linux给每个进程分配的时间片长度是10ms

long long wait = (remaining > REDIS_SYNCIO_RESOLUTION) ?

remaining : REDIS_SYNCIO_RESOLUTION;

long long elapsed;

/* Optimistically try to write before checking if the file descriptor

* is actually writable. At worst we get EAGAIN. */

nwritten = write(fd,ptr,size);

if (nwritten == -1) {

if (errno != EAGAIN) return -1;

} else {

ptr += nwritten;

size -= nwritten;

}

if (size == 0) return ret;

/* Wait */

aeWait(fd,AE_WRITABLE,wait);

elapsed = mstime() - start;

if (elapsed >= timeout) {

errno = ETIMEDOUT;

return -1;

}

remaining = timeout - elapsed;

}

}再次收到对PSYNC命令的响应,就是收到PONG响应。如果需要进行密码验证,就进行发送密码进行验证,注意发送的密码就是slave自己的密码。

而后通过AUTH & REPLCONF命令发送密码验证和自己的listenning port后,先尝试进行增量同步。这一步其实涉及到 redis 2.8版本以前的一个bug:如果master和slave之间正在执行数据同步的时候网络闪断,那么连接重新建立以后每次都要重新全量的接收数据!所以redis 2.8以后的版本就有了这个patch。

函数的流程为:

void syncWithMaster(aeEventLoop *el, int fd, void *privdata, int mask) {

/* Receive the PONG command. */

if (server.repl_state == REDIS_REPL_RECEIVE_PONG) {

char buf[1024];

/* Delete the readable event, we no longer need it now that there is

* the PING reply to read. */

aeDeleteFileEvent(server.el,fd,AE_READABLE);

/* Read the reply with explicit timeout. */

buf[0] = '\0';

// syncReadLine是以阻塞地方式读取回复,同syncWrite

if (syncReadLine(fd,buf,sizeof(buf),

server.repl_syncio_timeout*1000) == -1)

{

redisLog(REDIS_WARNING,

"I/O error reading PING reply from master: %s",

strerror(errno));

goto error;

}

/* We accept only two replies as valid, a positive +PONG reply

* (we just check for "+") or an authentication error.

* Note that older versions of Redis replied with "operation not

* permitted" instead of using a proper error code, so we test

* both. */

// 检查回复+PONG内容,处理除却noauth之类的其他错误

if (buf[0] != '+' && // 回复内容不是"+PONG",这里只比较第一个字节

strncmp(buf,"-NOAUTH",7) != 0 &&

strncmp(buf,"-ERR operation not permitted",28) != 0)

{

redisLog(REDIS_WARNING,"Error reply to PING from master: '%s'",buf);

goto error;

} else {

redisLog(REDIS_NOTICE,

"Master replied to PING, replication can continue...");

}

}

/* AUTH with the master if required. */

if(server.masterauth) {

err = sendSynchronousCommand(fd,"AUTH",server.masterauth,NULL);

if (err[0] == '-') {

redisLog(REDIS_WARNING,"Unable to AUTH to MASTER: %s",err);

sdsfree(err);

goto error;

}

sdsfree(err);

}

/* Set the slave port, so that Master's INFO command can list the

* slave listening port correctly. */

// 向server汇报自己的接收数据的端口

{

sds port = sdsfromlonglong(server.port);

err = sendSynchronousCommand(fd,"REPLCONF","listening-port",port,

NULL);

sdsfree(port);

/* Ignore the error if any, not all the Redis versions support

* REPLCONF listening-port. */

if (err[0] == '-') {

redisLog(REDIS_NOTICE,"(Non critical) Master does not understand REPLCONF listening-port: %s", err);

}

sdsfree(err);

}

/* Try a partial resynchonization. If we don't have a cached master

* slaveTryPartialResynchronization() will at least try to use PSYNC

* to start a full resynchronization so that we get the master run id

* and the global offset, to try a partial resync at the next

* reconnection attempt. */

// 尝试增量同步

psync_result = slaveTryPartialResynchronization(fd);

if (psync_result == PSYNC_CONTINUE) {

redisLog(REDIS_NOTICE, "MASTER <-> SLAVE sync: Master accepted a Partial Resynchronization.");

return;

}

/* Fall back to SYNC if needed. Otherwise psync_result == PSYNC_FULLRESYNC

* and the server.repl_master_runid and repl_master_initial_offset are

* already populated. */

// 如果不支持增量同步,那么发送SYNC命令,准备进行全量同步

if (psync_result == PSYNC_NOT_SUPPORTED) {

redisLog(REDIS_NOTICE,"Retrying with SYNC...");

if (syncWrite(fd,"SYNC\r\n",6,server.repl_syncio_timeout*1000) == -1) {

redisLog(REDIS_WARNING,"I/O error writing to MASTER: %s",

strerror(errno));

goto error;

}

}

/* Prepare a suitable temp file for bulk transfer */

// 创建一个临时文件,用于接收全量数据

while(maxtries--) {

snprintf(tmpfile,256,

"temp-%d.%ld.rdb",(int)server.unixtime,(long int)getpid());

dfd = open(tmpfile,O_CREAT|O_WRONLY|O_EXCL,0644);

if (dfd != -1) break;

sleep(1);

}

if (dfd == -1) {

redisLog(REDIS_WARNING,"Opening the temp file needed for MASTER <-> SLAVE synchronization: %s",strerror(errno));

goto error;

}

/* Setup the non blocking download of the bulk file. */

// 注册readSyncBulkPayload,以用于接收全量数据

if (aeCreateFileEvent(server.el,fd, AE_READABLE,readSyncBulkPayload,NULL)

== AE_ERR)

{

redisLog(REDIS_WARNING,

"Can't create readable event for SYNC: %s (fd=%d)",

strerror(errno),fd);

goto error;

}

server.repl_state = REDIS_REPL_TRANSFER;

server.repl_transfer_size = -1;

server.repl_transfer_read = 0;

server.repl_transfer_last_fsync_off = 0;

server.repl_transfer_fd = dfd;

server.repl_transfer_lastio = server.unixtime;

server.repl_transfer_tmpfile = zstrdup(tmpfile);

return;

error:

close(fd);

server.repl_transfer_s = -1;

server.repl_state = REDIS_REPL_CONNECT;

return;

}/* Send a synchronous command to the master. Used to send AUTH and

* REPLCONF commands before starting the replication with SYNC.

*

* The command returns an sds string representing the result of the

* operation. On error the first byte is a "-".

*/

char *sendSynchronousCommand(int fd, ...) {

va_list ap;

sds cmd = sdsempty();

char *arg, buf[256];

/* Create the command to send to the master, we use simple inline

* protocol for simplicity as currently we only send simple strings. */

va_start(ap,fd);

while(1) {

arg = va_arg(ap, char*);

if (arg == NULL) break;

if (sdslen(cmd) != 0) cmd = sdscatlen(cmd," ",1);

cmd = sdscat(cmd,arg);

}

cmd = sdscatlen(cmd,"\r\n",2);

/* Transfer command to the server. */

if (syncWrite(fd,cmd,sdslen(cmd),server.repl_syncio_timeout*1000) == -1) {

sdsfree(cmd);

return sdscatprintf(sdsempty(),"-Writing to master: %s",

strerror(errno));

}

sdsfree(cmd);

/* Read the reply from the server. */

if (syncReadLine(fd,buf,sizeof(buf),server.repl_syncio_timeout*1000) == -1)

{

return sdscatprintf(sdsempty(),"-Reading from master: %s",

strerror(errno));

}

return sdsnew(buf);

}有增量同步特性的主服务器为被发送的复制流创建一个内存缓冲区(in-memory backlog), 并且主服务器和所有从服务器之间都记录一个复制偏移量(replication offset)和一个主服务器 ID (master run id),当出现闪断但是slave又重新连接成功后,如果:

满足以上两个条件,那么主服务器会向从服务器发送断线时缺失的那部分数据。否则的话,从服务器就要执执行全量同步操作。

//master拒绝增量同步,释放与master之间的连接

void replicationDiscardCachedMaster(void) {

if (server.cached_master == NULL) return;

redisLog(REDIS_NOTICE,"Discarding previously cached master state.");

server.cached_master->flags &= ~REDIS_MASTER;

freeClient(server.cached_master);

server.cached_master = NULL;

}

/* Turn the cached master into the current master, using the file descriptor

* passed as argument as the socket for the new master.

*

* This function is called when successfully setup a partial resynchronization

* so the stream of data that we'll receive will start from were this

* master left. */

// 如果master答应增量同步,则把state更改为REDIS_REPL_CONNECTED,然后注册增量同步回调函数readQueryFromClient

void replicationResurrectCachedMaster(int newfd) {

server.master = server.cached_master;

server.cached_master = NULL;

server.master->fd = newfd;

server.master->flags &= ~(REDIS_CLOSE_AFTER_REPLY|REDIS_CLOSE_ASAP);

server.master->authenticated = 1;

server.master->lastinteraction = server.unixtime;

server.repl_state = REDIS_REPL_CONNECTED;

/* Re-add to the list of clients. */

// 把master作为新的client放在client链表尾部,然后注册增量同步回调函数readQueryFromClient

listAddNodeTail(server.clients,server.master);

if (aeCreateFileEvent(server.el, newfd, AE_READABLE,

readQueryFromClient, server.master)) {

redisLog(REDIS_WARNING,"Error resurrecting the cached master, impossible to add the readable handler: %s", strerror(errno));

// 注册失败,就尽快关闭与master之间的连接

freeClientAsync(server.master); /* Close ASAP. */

}

/* We may also need to install the write handler as well if there is

* pending data in the write buffers. */

// 如果还有待reply的数据没有发送出去,就注册reply函数sendReplyToClient

if (server.master->bufpos || listLength(server.master->reply)) {

if (aeCreateFileEvent(server.el, newfd, AE_WRITABLE,

sendReplyToClient, server.master)) {

redisLog(REDIS_WARNING,"Error resurrecting the cached master, impossible to add the writable handler: %s", strerror(errno));

freeClientAsync(server.master); /* Close ASAP. */

}

}

}

/*

* 这个函数用于应对与master之间的增量同步。如果没有cached_master,则PSYNC的参

* 数可以设置为"-1",至少可以全量同步所需要的两个参数:master的server id和

* offset。这个函数用来被函数syncWithMaster调用,所以应满足下面两个条件:

*

* 1) master和slave之间的连接已经建立起来。

* 2) 这个函数不会close掉这个连接,接下来的增量同步还会使用到它。

*

* 函数的返回值:

*

* PSYNC_CONTINUE: 准备好进行增量同步,此时可以通过

* replicationResurrectCachedMaster函数保存

* 与master之间的连接

* PSYNC_FULLRESYNC: master虽然支持增量同步,但是与slave之前并没有进行过数

* 据同步,二者之间应该进行全量数据同步,master会把master

* run_id和全局复制offset告知slave

* PSYNC_NOT_SUPPORTED: master不支持PSYNC命令

*/

#define PSYNC_CONTINUE 0

#define PSYNC_FULLRESYNC 1

#define PSYNC_NOT_SUPPORTED 2

int slaveTryPartialResynchronization(int fd) {

char *psync_runid;

char psync_offset[32];

sds reply;

/* Initially set repl_master_initial_offset to -1 to mark the current

* master run_id and offset as not valid. Later if we'll be able to do

* a FULL resync using the PSYNC command we'll set the offset at the

* right value, so that this information will be propagated to the

* client structure representing the master into server.master. */

// 把repl_master_initial_offset赋值为-1,以说明master run_id和offset无效

server.repl_master_initial_offset = -1;

// 把自己记录的server runid和offset发送给master

if (server.cached_master) {

psync_runid = server.cached_master->replrunid;

snprintf(psync_offset,sizeof(psync_offset),"%lld", server.cached_master->reploff+1);

redisLog(REDIS_NOTICE,"Trying a partial resynchronization (request %s:%s).", psync_runid, psync_offset);

} else {

redisLog(REDIS_NOTICE,"Partial resynchronization not possible (no cached master)");

psync_runid = "?";

memcpy(psync_offset,"-1",3);

}

/* Issue the PSYNC command */

// 以同步方式发出PSYNC命令以及其参数runid & offset,并获取reply

reply = sendSynchronousCommand(fd,"PSYNC",psync_runid,psync_offset,NULL);

// 如果回复是FULLRESYNC,则分析回复的runid & offset

if (!strncmp(reply,"+FULLRESYNC",11)) {

char *runid = NULL, *offset = NULL;

/* FULL RESYNC, parse the reply in order to extract the run id

* and the replication offset. */

runid = strchr(reply,' ');

if (runid) {

runid++;

offset = strchr(runid,' ');

if (offset) offset++;

}

if (!runid || !offset || (offset-runid-1) != REDIS_RUN_ID_SIZE) {

redisLog(REDIS_WARNING,

"Master replied with wrong +FULLRESYNC syntax.");

/* This is an unexpected condition, actually the +FULLRESYNC

* reply means that the master supports PSYNC, but the reply

* format seems wrong. To stay safe we blank the master

* runid to make sure next PSYNCs will fail. */

// master是支持psync的,但是由于发送给master的runid & offset不正确,所以为了数据完整性还是进行全量同步为妥

memset(server.repl_master_runid,0,REDIS_RUN_ID_SIZE+1);

} else {

// 记录全量同步其实参数runid & offset

memcpy(server.repl_master_runid, runid, offset-runid-1); // offset 和 runid分别是reply字符串起始处的指针

server.repl_master_runid[REDIS_RUN_ID_SIZE] = '\0';

server.repl_master_initial_offset = strtoll(offset,NULL,10);

redisLog(REDIS_NOTICE,"Full resync from master: %s:%lld",

server.repl_master_runid,

server.repl_master_initial_offset);

}

/* We are going to full resync, discard the cached master structure. */

// 释放master与client之间的连接,开始进行全量同步

replicationDiscardCachedMaster();

sdsfree(reply);

return PSYNC_FULLRESYNC;

}

// master答应增量同步

if (!strncmp(reply,"+CONTINUE",9)) {

/* Partial resync was accepted, set the replication state accordingly */

redisLog(REDIS_NOTICE,

"Successful partial resynchronization with master.");

sdsfree(reply);

replicationResurrectCachedMaster(fd);

return PSYNC_CONTINUE;

}

/* If we reach this point we receied either an error since the master does

* not understand PSYNC, or an unexpected reply from the master.

* Return PSYNC_NOT_SUPPORTED to the caller in both cases. */

// master不支持增量同步或者发送了其他错误

if (strncmp(reply,"-ERR",4)) {

/* If it's not an error, log the unexpected event. */

redisLog(REDIS_WARNING,

"Unexpected reply to PSYNC from master: %s", reply);

} else {

redisLog(REDIS_NOTICE,

"Master does not support PSYNC or is in "

"error state (reply: %s)", reply);

}

sdsfree(reply);

replicationDiscardCachedMaster();

return PSYNC_NOT_SUPPORTED;

}slave启动之后,刚开始进行的数据同步只能以全量的方式进行,尔后才有增量同步的可能。所以先分析全量同步的流程。

全量同步函数流程:

// 向disk同步数据的时候,如果是linux系统则采用其特有的函数rdb_fsync_range

#ifdef HAVE_SYNC_FILE_RANGE

#define rdb_fsync_range(fd,off,size) sync_file_range(fd,off,size,SYNC_FILE_RANGE_WAIT_BEFORE|SYNC_FILE_RANGE_WRITE)

#else

#define rdb_fsync_range(fd,off,size) fsync(fd)

#endif

/* Asynchronously read the SYNC payload we receive from a master */

// 如果slave从master同步过来的数据超过8M,就要进行fsync

#define REPL_MAX_WRITTEN_BEFORE_FSYNC (1024*1024*8) /* 8 MB */

void readSyncBulkPayload(aeEventLoop *el, int fd, void *privdata, int mask) {

char buf[4096];

ssize_t nread, readlen;

off_t left;

REDIS_NOTUSED(el);

REDIS_NOTUSED(privdata);

REDIS_NOTUSED(mask);

/* Static vars used to hold the EOF mark, and the last bytes received

* form the server: when they match, we reached the end of the transfer. */

// 如果无法获取到bulk的长度,则master会给出数据末尾的标志符集,存于eofmark

static char eofmark[REDIS_RUN_ID_SIZE];

static char lastbytes[REDIS_RUN_ID_SIZE];

// 用于说明是否已经精确地获取到了数据的长度[1:否;0:是]

// 注意:无法精确知道数据长度的模式可称之为模糊模式

static int usemark = 0;

// 获取数据块的长度

/* If repl_transfer_size == -1 we still have to read the bulk length

* from the master reply. */

// repl_transfer_size值初始为-1,见函数syncWithMaster。

// 如果repl_transfer_size为-1,说明刚开始读取master回复

if (server.repl_transfer_size == -1) {

// 以同步的方式读取数据,超时时间为REDIS_REPL_SYNCIO_TIMEOUT(5s)

if (syncReadLine(fd,buf,1024,server.repl_syncio_timeout*1000) == -1) {

redisLog(REDIS_WARNING,

"I/O error reading bulk count from MASTER: %s",

strerror(errno));

goto error;

}

if (buf[0] == '-') {

redisLog(REDIS_WARNING,

"MASTER aborted replication with an error: %s",

buf+1);

goto error;

} else if (buf[0] == '\0') {

/* At this stage just a newline works as a PING in order to take

* the connection live. So we refresh our last interaction

* timestamp. */

// 收到的内容为空,则master仅仅是为了连接有效

server.repl_transfer_lastio = server.unixtime;

return;

} else if (buf[0] != '$') {

redisLog(REDIS_WARNING,"Bad protocol from MASTER, the first byte is not '$' (we received '%s'), are you sure the host and port are right?", buf);

goto error;

}

// 读取长度数值

/* There are two possible forms for the bulk payload. One is the

* usual $<count> bulk format. The other is used for diskless transfers

* when the master does not know beforehand the size of the file to

* transfer. In the latter case, the following format is used:

*

* $EOF:<40 bytes delimiter>

*

* At the end of the file the announced delimiter is transmitted. The

* delimiter is long and random enough that the probability of a

* collision with the actual file content can be ignored. */

// 可能收到两种形式的回复。一种是$<count>,指明了数据长度。另一种则是

// $EOF:<40 bytes>,这种情况是master没有启动磁盘存储,它无法计算要传输的Bulk的值

if (strncmp(buf+1,"EOF:",4) == 0 && strlen(buf+5) >= REDIS_RUN_ID_SIZE) {

usemark = 1;

memcpy(eofmark,buf+5,REDIS_RUN_ID_SIZE);

memset(lastbytes,0,REDIS_RUN_ID_SIZE);

/* Set any repl_transfer_size to avoid entering this code path

* at the next call. */

// 把值设为0,以避免在进入这个分支

server.repl_transfer_size = 0;

redisLog(REDIS_NOTICE,

"MASTER <-> SLAVE sync: receiving streamed RDB from master");

} else {

usemark = 0;

server.repl_transfer_size = strtol(buf+1,NULL,10);

redisLog(REDIS_NOTICE,

"MASTER <-> SLAVE sync: receiving %lld bytes from master",

(long long) server.repl_transfer_size);

}

return;

}

/* Read bulk data */

if (usemark) {

// 模糊模式下不知道到底该读多长,就以buf长度为限

readlen = sizeof(buf);

} else {

left = server.repl_transfer_size - server.repl_transfer_read;

// 判断left与readlen的关系,如果超过buf的长度就取buf长度为上限

readlen = (left < (signed)sizeof(buf)) ? left : (signed)sizeof(buf);

}

nread = read(fd,buf,readlen);

if (nread <= 0) {

redisLog(REDIS_WARNING,"I/O error trying to sync with MASTER: %s",

(nread == -1) ? strerror(errno) : "connection lost");

replicationAbortSyncTransfer();

return;

}

server.stat_net_input_bytes += nread;

// 判断模糊模式下是否读取到了数据末尾

/* When a mark is used, we want to detect EOF asap in order to avoid

* writing the EOF mark into the file... */

int eof_reached = 0;

if (usemark) {

/* Update the last bytes array, and check if it matches our delimiter.*/

if (nread >= REDIS_RUN_ID_SIZE) {

memcpy(lastbytes,buf+nread-REDIS_RUN_ID_SIZE,REDIS_RUN_ID_SIZE);

} else {

int rem = REDIS_RUN_ID_SIZE-nread;

memmove(lastbytes,lastbytes+nread,rem);

memcpy(lastbytes+rem,buf,nread);

}

if (memcmp(lastbytes,eofmark,REDIS_RUN_ID_SIZE) == 0) eof_reached = 1;

}

// 把收到的数据写到disk上

server.repl_transfer_lastio = server.unixtime;

if (write(server.repl_transfer_fd,buf,nread) != nread) {

redisLog(REDIS_WARNING,"Write error or short write writing to the DB dump file needed for MASTER <-> SLAVE synchronization: %s", strerror(errno));

goto error;

}

server.repl_transfer_read += nread;

// 模糊模式下如果数据读取完毕,则删除最后的40B

/* Delete the last 40 bytes from the file if we reached EOF. */

if (usemark && eof_reached) {

if (ftruncate(server.repl_transfer_fd,

server.repl_transfer_read - REDIS_RUN_ID_SIZE) == -1)

{

redisLog(REDIS_WARNING,"Error truncating the RDB file received from the master for SYNC: %s", strerror(errno));

goto error;

}

}

// 把数据同步到磁盘,以免造成数据堆积

/* Sync data on disk from time to time, otherwise at the end of the transfer

* we may suffer a big delay as the memory buffers are copied into the

* actual disk. */

if (server.repl_transfer_read >=

server.repl_transfer_last_fsync_off + REPL_MAX_WRITTEN_BEFORE_FSYNC)

{

off_t sync_size = server.repl_transfer_read -

server.repl_transfer_last_fsync_off;

rdb_fsync_range(server.repl_transfer_fd,

server.repl_transfer_last_fsync_off, sync_size);

server.repl_transfer_last_fsync_off += sync_size;

}

/* Check if the transfer is now complete */

if (!usemark) {

if (server.repl_transfer_read == server.repl_transfer_size)

eof_reached = 1;

}

if (eof_reached) {

// 把临时文件rename为rdb文件

if (rename(server.repl_transfer_tmpfile,server.rdb_filename) == -1) {

redisLog(REDIS_WARNING,"Failed trying to rename the temp DB into dump.rdb in MASTER <-> SLAVE synchronization: %s", strerror(errno));

replicationAbortSyncTransfer();

return;

}

redisLog(REDIS_NOTICE, "MASTER <-> SLAVE sync: Flushing old data");

signalFlushedDb(-1);

// load到内存之前,先把内存数据清空

emptyDb(replicationEmptyDbCallback);

/* Before loading the DB into memory we need to delete the readable

* handler, otherwise it will get called recursively since

* rdbLoad() will call the event loop to process events from time to

* time for non blocking loading. */

// 在把数据load从磁盘load到内存之前,暂时不再从master读取数据

aeDeleteFileEvent(server.el,server.repl_transfer_s,AE_READABLE);

redisLog(REDIS_NOTICE, "MASTER <-> SLAVE sync: Loading DB in memory");

if (rdbLoad(server.rdb_filename) != REDIS_OK) {

redisLog(REDIS_WARNING,"Failed trying to load the MASTER synchronization DB from disk");

replicationAbortSyncTransfer();

return;

}

// 把replication状态修改为CONNECTED

/* Final setup of the connected slave <- master link */

zfree(server.repl_transfer_tmpfile);

close(server.repl_transfer_fd);

server.master = createClient(server.repl_transfer_s);

server.master->flags |= REDIS_MASTER;

server.master->authenticated = 1;

server.repl_state = REDIS_REPL_CONNECTED;

// 在slaveTryPartialResynchronization中可以获取下面两个值

server.master->reploff = server.repl_master_initial_offset;

memcpy(server.master->replrunid, server.repl_master_runid,

sizeof(server.repl_master_runid));

/* If master offset is set to -1, this master is old and is not

* PSYNC capable, so we flag it accordingly. */

if (server.master->reploff == -1)

server.master->flags |= REDIS_PRE_PSYNC;

redisLog(REDIS_NOTICE, "MASTER <-> SLAVE sync: Finished with success");

/* Restart the AOF subsystem now that we finished the sync. This

* will trigger an AOF rewrite, and when done will start appending

* to the new file. */

if (server.aof_state != REDIS_AOF_OFF) {

int retry = 10;

stopAppendOnly();

while (retry-- && startAppendOnly() == REDIS_ERR) {

redisLog(REDIS_WARNING,"Failed enabling the AOF after successful master synchronization! Trying it again in one second.");

sleep(1);

}

if (!retry) {

redisLog(REDIS_WARNING,"FATAL: this slave instance finished the synchronization with its master, but the AOF can't be turned on. Exiting now.");

exit(1);

}

}

}

return;

error:

replicationAbortSyncTransfer();

return;

}void readQueryFromClient(aeEventLoop *el, int fd, void *privdata, int mask) {

redisClient *c = (redisClient*) privdata;

int nread, readlen;

size_t qblen;

REDIS_NOTUSED(el);

REDIS_NOTUSED(mask);

server.current_client = c;

readlen = REDIS_IOBUF_LEN;

/* If this is a multi bulk request, and we are processing a bulk reply

* that is large enough, try to maximize the probability that the query

* buffer contains exactly the SDS string representing the object, even

* at the risk of requiring more read(2) calls. This way the function

* processMultiBulkBuffer() can avoid copying buffers to create the

* Redis Object representing the argument. */

if (c->reqtype == REDIS_REQ_MULTIBULK && c->multibulklen && c->bulklen != -1

&& c->bulklen >= REDIS_MBULK_BIG_ARG)

{

int remaining = (unsigned)(c->bulklen+2)-sdslen(c->querybuf);

if (remaining < readlen) readlen = remaining;

}

// 读取reply data

qblen = sdslen(c->querybuf);

if (c->querybuf_peak < qblen) c->querybuf_peak = qblen;

c->querybuf = sdsMakeRoomFor(c->querybuf, readlen);

nread = read(fd, c->querybuf+qblen, readlen);

if (nread == -1) {

if (errno == EAGAIN) {

nread = 0;

} else {

redisLog(REDIS_VERBOSE, "Reading from client: %s",strerror(errno));

freeClient(c);

return;

}

} else if (nread == 0) {

redisLog(REDIS_VERBOSE, "Client closed connection");

freeClient(c);

return;

}

if (nread) {

sdsIncrLen(c->querybuf,nread);

c->lastinteraction = server.unixtime;

if (c->flags & REDIS_MASTER) c->reploff += nread;

server.stat_net_input_bytes += nread;

} else {

server.current_client = NULL;

return;

}

if (sdslen(c->querybuf) > server.client_max_querybuf_len) {

sds ci = catClientInfoString(sdsempty(),c), bytes = sdsempty();

bytes = sdscatrepr(bytes,c->querybuf,64);

redisLog(REDIS_WARNING,"Closing client that reached max query buffer length: %s (qbuf initial bytes: %s)", ci, bytes);

sdsfree(ci);

sdsfree(bytes);

freeClient(c);

return;

}

// 像处理client请求那样处理收到的数据

processInputBuffer(c);

server.current_client = NULL;

}server.master代表slave与master之间的连接句柄,当这个连接超时后连接会被关闭,但是句柄这个连接所用到的内存资源会被赋值给server.cachedmaster。待需要重新与master建立连接的时候,server.master只需要从server.cachedmaster处获取到这个句柄就可以了。

通过二者实现了slave与master之间连接句柄的循环利用。cached_master可以认为是一个“迷你型”的资源回收池。

slave在与master进行连接并同步数据的过程中修改相关的状态,待全量同步完成,会调用createClient,并把状态修改为CONNECTED.

相关的代码可以到2.5 全量同步一节参考函数readSyncBulkPayload。

slave每次与master之间有通信时,server.master->lastinteraction都会被更新。

void replicationCron(void) {

/* Timed out master when we are an already connected slave? */

// 如果超过REDIS_REPL_TIMEOUT(60s)与master之间没有通信,则关闭与master之间的连接

if (server.masterhost && server.repl_state == REDIS_REPL_CONNECTED &&

(time(NULL)-server.master->lastinteraction) > server.repl_timeout)

{

redisLog(REDIS_WARNING,"MASTER timeout: no data nor PING received...");

freeClient(server.master);

}

}

void freeClient(redisClient *c) {

/* If it is our master that's beging disconnected we should make sure

* to cache the state to try a partial resynchronization later.

*

* Note that before doing this we make sure that the client is not in

* some unexpected state, by checking its flags. */

if (server.master && c->flags & REDIS_MASTER) {

redisLog(REDIS_WARNING,"Connection with master lost.");

if (!(c->flags & (REDIS_CLOSE_AFTER_REPLY|

REDIS_CLOSE_ASAP|

REDIS_BLOCKED|

REDIS_UNBLOCKED)))

{

replicationCacheMaster(c);

return;

}

}

}

/*

* 这个函数会被freeClient调用,以把与master之间的连接缓存起来。

*

* 另外两个函数会分别在不同的状况下处理cached_master:

*

* 1 如果以后不会再用与master之间的连接,replicationDiscardCachedMaster()

* 会释放掉这个链接;

* 2 如果发出了PSYNC命令,replicationResurrectCachedMaster()则会重新激活cached_master

*/

void replicationCacheMaster(redisClient *c) {

listNode *ln;

redisAssert(server.master != NULL && server.cached_master == NULL);

redisLog(REDIS_NOTICE,"Caching the disconnected master state.");

/* Remove from the list of clients, we don't want this client to be

* listed by CLIENT LIST or processed in any way by batch operations. */

// 把连接从server.clients这个list中删除掉,以免客户端用CLIENT LIST命令获取到这个连接

ln = listSearchKey(server.clients,c);

redisAssert(ln != NULL);

listDelNode(server.clients,ln);

/* Save the master. Server.master will be set to null later by

* replicationHandleMasterDisconnection(). */

// 放入缓存池,在函数replicationHandleMasterDisconnection()里master会被置为nil

server.cached_master = server.master;

/* Remove the event handlers and close the socket. We'll later reuse

* the socket of the new connection with the master during PSYNC. */

// 删除掉与连接相关的读写事件,并close掉连接

aeDeleteFileEvent(server.el,c->fd,AE_READABLE);

aeDeleteFileEvent(server.el,c->fd,AE_WRITABLE);

close(c->fd);

/* Set fd to -1 so that we can safely call freeClient(c) later. */

c->fd = -1;

/* Invalidate the Peer ID cache. */

if (c->peerid) {

sdsfree(c->peerid);

c->peerid = NULL;

}

/* Caching the master happens instead of the actual freeClient() call,

* so make sure to adjust the replication state. This function will

* also set server.master to NULL. */

// 把master置为nil,并置state为REDIS_REPL_CONNECT

replicationHandleMasterDisconnection();

}

/* This function is called when the slave lose the connection with the

* master into an unexpected way. */

void replicationHandleMasterDisconnection(void) {

server.master = NULL;

server.repl_state = REDIS_REPL_CONNECT;

server.repl_down_since = server.unixtime;

/* We lost connection with our master, force our slaves to resync

* with us as well to load the new data set.

*

* If server.masterhost is NULL the user called SLAVEOF NO ONE so

* slave resync is not needed. */

if (server.masterhost != NULL) disconnectSlaves();

}

/* Close all the slaves connections. This is useful in chained replication

* when we resync with our own master and want to force all our slaves to

* resync with us as well. */

void disconnectSlaves(void) {

while (listLength(server.slaves)) {

listNode *ln = listFirst(server.slaves);

freeClient((redisClient*)ln->value);

}

}当slave与master之间进行增量同步的时候,会激活server.cached_master。具体流程请参考 /** 2.4.2 增量同步尝试 **/ 一节的函数replicationResurrectCachedMaster()。

void readQueryFromClient(aeEventLoop *el, int fd, void *privdata, int mask) {

redisClient *c = (redisClient*) privdata;

int nread, readlen;

size_t qblen;

REDIS_NOTUSED(el);

REDIS_NOTUSED(mask);

server.current_client = c;

readlen = REDIS_IOBUF_LEN;

/* If this is a multi bulk request, and we are processing a bulk reply

* that is large enough, try to maximize the probability that the query

* buffer contains exactly the SDS string representing the object, even

* at the risk of requiring more read(2) calls. This way the function

* processMultiBulkBuffer() can avoid copying buffers to create the

* Redis Object representing the argument. */

if (c->reqtype == REDIS_REQ_MULTIBULK && c->multibulklen && c->bulklen != -1

&& c->bulklen >= REDIS_MBULK_BIG_ARG)

{

int remaining = (unsigned)(c->bulklen+2)-sdslen(c->querybuf);

if (remaining < readlen) readlen = remaining;

}

// 创建buffer,并读取请求数据

qblen = sdslen(c->querybuf);

if (c->querybuf_peak < qblen) c->querybuf_peak = qblen;

c->querybuf = sdsMakeRoomFor(c->querybuf, readlen);

nread = read(fd, c->querybuf+qblen, readlen);

if (nread == -1) {

if (errno == EAGAIN) {

nread = 0;

} else {

redisLog(REDIS_VERBOSE, "Reading from client: %s",strerror(errno));

freeClient(c);

return;

}

} else if (nread == 0) {

redisLog(REDIS_VERBOSE, "Client closed connection");

freeClient(c);

return;

}

if (nread) {

sdsIncrLen(c->querybuf,nread);

c->lastinteraction = server.unixtime;

if (c->flags & REDIS_MASTER) c->reploff += nread;

server.stat_net_input_bytes += nread;

} else {

server.current_client = NULL;

return;

}

if (sdslen(c->querybuf) > server.client_max_querybuf_len) {

sds ci = catClientInfoString(sdsempty(),c), bytes = sdsempty();

bytes = sdscatrepr(bytes,c->querybuf,64);

redisLog(REDIS_WARNING,"Closing client that reached max query buffer length: %s (qbuf initial bytes: %s)", ci, bytes);

sdsfree(ci);

sdsfree(bytes);

freeClient(c);

return;

}

// 处理收到的buffer

processInputBuffer(c);

server.current_client = NULL;

}void processInputBuffer(redisClient *c) {

// 循环处理收到的一批命令

/* Keep processing while there is something in the input buffer */

while(sdslen(c->querybuf)) {

/* Determine request type when unknown. */

if (!c->reqtype) {

if (c->querybuf[0] == '*') {

c->reqtype = REDIS_REQ_MULTIBULK;

} else {

c->reqtype = REDIS_REQ_INLINE;

}

}

if (c->reqtype == REDIS_REQ_INLINE) {

if (processInlineBuffer(c) != REDIS_OK) break;

} else if (c->reqtype == REDIS_REQ_MULTIBULK) {

if (processMultibulkBuffer(c) != REDIS_OK) break;

} else {

redisPanic("Unknown request type");

}

/* Multibulk processing could see a <= 0 length. */

if (c->argc == 0) {

resetClient(c);

} else {

/* Only reset the client when the command was executed. */

// 执行命令

if (processCommand(c) == REDIS_OK)

resetClient(c);

}

}

}把收到的字符流按照redis protocol处理成redis能够理解的数据,即把数据由“泥巴”初步的加工成“砖坯”。

还有一个同类函数是processMultibulkBuffer()。

int processInlineBuffer(redisClient *c) {

char *newline;

int argc, j;

sds *argv, aux;

size_t querylen;

/* Search for end of line */

newline = strchr(c->querybuf,'\n');

/* Nothing to do without a \r\n */

if (newline == NULL) {

if (sdslen(c->querybuf) > REDIS_INLINE_MAX_SIZE) {

addReplyError(c,"Protocol error: too big inline request");

setProtocolError(c,0);

}

return REDIS_ERR;

}

/* Handle the \r\n case. */

if (newline && newline != c->querybuf && *(newline-1) == '\r')

newline--;

/* Split the input buffer up to the \r\n */

querylen = newline-(c->querybuf);

aux = sdsnewlen(c->querybuf,querylen);

argv = sdssplitargs(aux,&argc);

sdsfree(aux);

if (argv == NULL) {

addReplyError(c,"Protocol error: unbalanced quotes in request");

setProtocolError(c,0);

return REDIS_ERR;

}

/* Newline from slaves can be used to refresh the last ACK time.

* This is useful for a slave to ping back while loading a big

* RDB file. */

if (querylen == 0 && c->flags & REDIS_SLAVE)

c->repl_ack_time = server.unixtime;

/* Leave data after the first line of the query in the buffer */

sdsrange(c->querybuf,querylen+2,-1);

/* Setup argv array on client structure */

if (argc) {

if (c->argv) zfree(c->argv);

c->argv = zmalloc(sizeof(robj*)*argc);

}

/* Create redis objects for all arguments. */

for (c->argc = 0, j = 0; j < argc; j++) {

if (sdslen(argv[j])) {

c->argv[c->argc] = createObject(REDIS_STRING,argv[j]);

c->argc++;

} else {

sdsfree(argv[j]);

}

}

zfree(argv);

return REDIS_OK;

}处理命令的前期核验流程:

min-slaves-to-write,拒绝写请求;

slave-serve-stale-data是no,则只处理INFO和SLAVEOF命令;

int processCommand(redisClient *c) {

/* The QUIT command is handled separately. Normal command procs will

* go through checking for replication and QUIT will cause trouble

* when FORCE_REPLICATION is enabled and would be implemented in

* a regular command proc. */

// 单独处理quit命令,此时应该处理完收尾工作[如把replication工作]再退出

if (!strcasecmp(c->argv[0]->ptr,"quit")) {

addReply(c,shared.ok);

c->flags |= REDIS_CLOSE_AFTER_REPLY;

return REDIS_ERR;

}

/* Now lookup the command and check ASAP about trivial error conditions